Correcting Learning-based Perception for Safety

Abstract

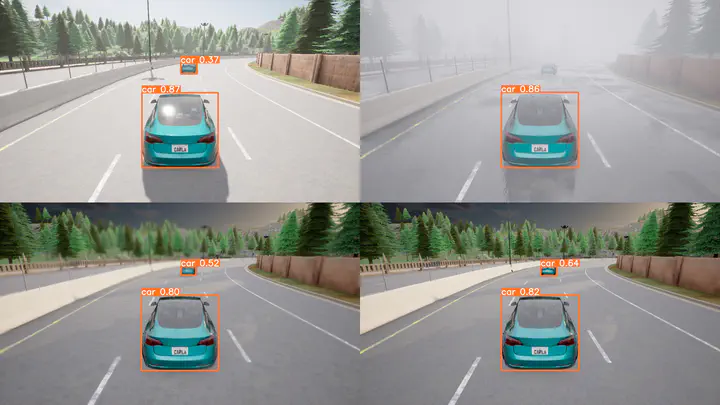

Learning-enabled perception is important in many autonomous systems. Unlike with traditional sensors, the boundary between the conditions where ML perception works and those where it does not is poorly characterized. Incorrect perception can lead to unsafe or overly conservative downstream control actions. In this paper, we propose a two-step strategy for correcting ML-based state estimation. First, an offline computation characterizes the uncertainties resulting from the ML module’s state estimation, using preimages of perception contracts. Second, at runtime, a risk heuristic is used to choose particular states from the uncertain estimates to drive the control decisions. We perform extensive simulation-based evaluation of this runtime perception correction strategy on different vision-based adaptive cruise controllers (ACC modules), in different weather conditions and road scenarios. Out of 45 ACC scenarios where the original perception-based control system using Yolo and LaneNet led to safety violations, our runtime perception correction preserved safety in 73% of the scenarios; our method could not recover the remaining 27% of the scenarios, where the construction of the preimages of perception contracts is not fully conformant. Further, our runtime perception correction strategy is not overly conservative: on average, only a 2.8% increase in completion time is experienced in the corrected scenarios, with mild interventions.
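To make the two-step strategy concrete, the following minimal Python sketch illustrates the idea on a one-dimensional distance estimate. It is not the implementation evaluated in this paper. The function names `build_preimage` and `correct_estimate`, the binning by `bin_width`, the approximation of a preimage by an interval of sampled ground-truth distances, the choice of "smallest plausible distance" as the risk heuristic, and the fallback to the raw estimate are all assumptions made for illustration.

```python
import math


def build_preimage(samples, bin_width=1.0):
    """Offline step (illustrative): for each binned perceived distance,
    record the interval of ground-truth distances seen to produce it.

    `samples` is an iterable of (true_distance, estimated_distance) pairs.
    The interval is a sampled stand-in for a preimage, not a verified one.
    """
    preimage = {}
    for true_d, est_d in samples:
        key = math.floor(est_d / bin_width)
        lo, hi = preimage.get(key, (true_d, true_d))
        preimage[key] = (min(lo, true_d), max(hi, true_d))
    return preimage


def correct_estimate(preimage, est_d, bin_width=1.0):
    """Runtime step (illustrative): choose one state from the uncertain set.

    Assumed risk heuristic: the smallest distance consistent with the
    estimate is treated as the riskiest, so the controller plans for it.
    """
    key = math.floor(est_d / bin_width)
    if key not in preimage:
        # No offline data for this estimate: fall back to the raw value.
        return est_d
    lo, _hi = preimage[key]
    return min(lo, est_d)
```

For example, if offline samples show that estimates near 25 m were produced by true distances between 18 m and 30 m, `correct_estimate` returns 18 m for an estimate of 25.5 m, so the downstream controller would act on the closer, riskier distance. An estimate whose bin was never observed offline passes through unchanged in this sketch, which loosely mirrors the situation where the offline characterization does not cover the states actually encountered.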